Recently, I've been feeling that the world is becoming more and more like science fiction. Three months ago, I thought GPT was just a sensational headline pushed by self-media accounts, merely a probabilistic model that could converse with humans. But over the past month, I've dug into what GPT can do and have been repeatedly amazed. Still, its intelligence in many areas is quite superficial, which made me feel that its value was limited. So in my own applications, I positioned GPT as a secretary that could help organize documents, or deepen and broaden my thinking through some simple questions and challenges.

A recent event changed my perspective. Due to product needs, I had to create a text editor that interacts not through keyboard input but through direct natural language dialogue. For example, users can say, "Insert 'What a lovely day today' after the third line," or "Replace the content of the sixth line with 'What a lovely day today'," and it will do so. The core challenge of this text editor is not editing text but translating human natural language into machine-understandable language. This is similar to translating English to Chinese and requires deep machine learning knowledge. Three months ago, with my limited NLP experience, I would have used a BERT-based machine translation model, generated a lot of training data, fine-tuned it on expensive GPUs, and finally deployed it to a production environment. This process requires not only machine learning experience but also practical engineering experience, as BERT is a large model and runs slowly. To avoid making users wait, we would need to perform complex engineering optimizations such as distillation and quantization. The whole process could easily take a week.

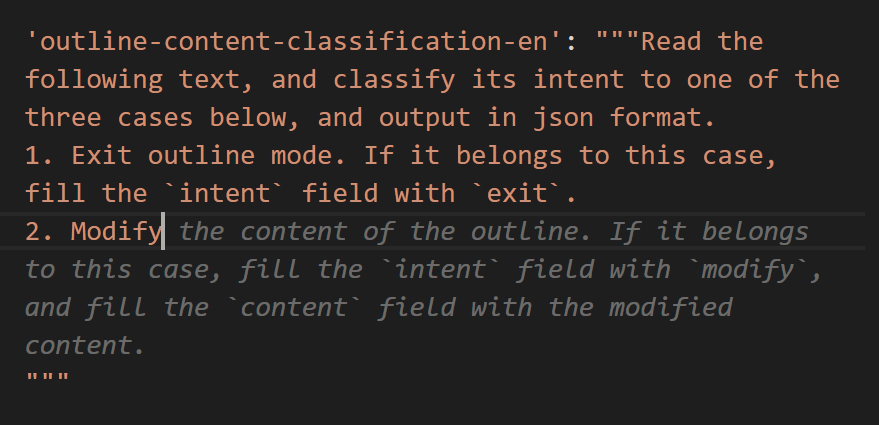

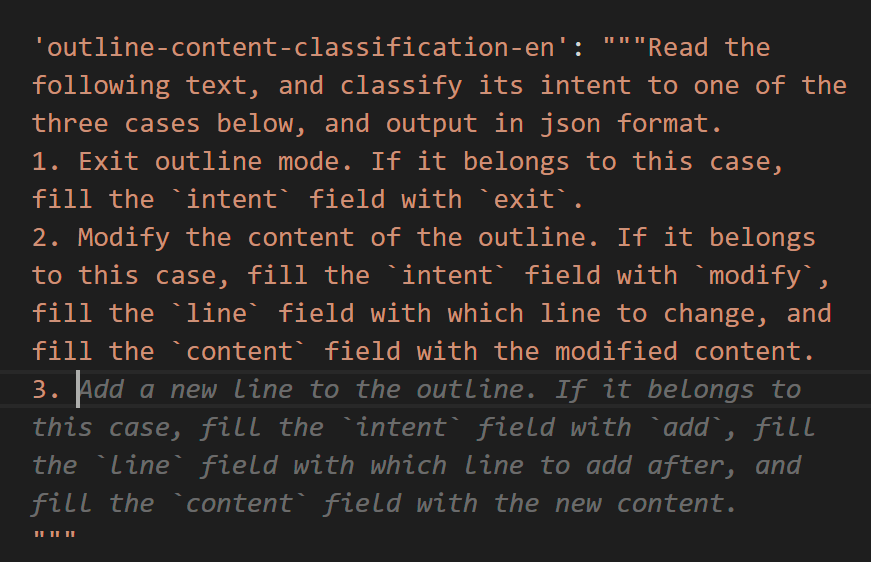

However, GPT has revolutionized all of this. Now, we don't need any machine learning experience; we only need to call OpenAI's API and know how to talk to GPT. Specifically, we need to describe the task to GPT. My prompt to it was something like this: "Please read the following text, classify it into one of the three situations below, and output the corresponding JSON. In the first situation, the text intends to exit draft mode, so you need to set the 'intent' field to 'exit.' In the second situation, the user wants to append a phrase to the end of a certain line, so you need to set 'intent' to 'append,' 'content' to the phrase, and 'line' to the line number. In the third situation, if the user wants to change a certain line to their desired content, you need to set 'intent' to 'modify,' 'content' to the modified content, and 'line' to the line number." I then provided some examples, and it produced exactly the right output! What used to take a week can now be done in just ten minutes, an acceleration of 100 or even 1,000 times. More importantly, GPT not only simplifies development and improves efficiency but also lowers the entry barrier. Previously, it might have taken someone with a master's or doctoral degree and extensive engineering experience to get a model running within a week. But now anyone, even a middle school student, who can describe the problem and knows the JSON format can implement this function by talking to GPT in natural language, and not necessarily in English.
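As a rough illustration, the prompt described above could be assembled and its reply checked along the following lines. This is only a sketch under assumptions: the exact prompt wording, the few-shot examples, and the names `build_prompt` and `parse_reply` are hypothetical, and the call to OpenAI's API is deliberately left out, so `reply` stands in for whatever text the model returns.

```python
import json

# Hypothetical task description, paraphrasing the prompt quoted above.
TASK = (
    'Please read the following text, classify it into one of the three '
    'situations below, and output the corresponding JSON.\n'
    '1. The text intends to exit draft mode: set "intent" to "exit".\n'
    '2. The user wants to append a phrase to the end of a line: set "intent" '
    'to "append", "content" to the phrase, and "line" to the line number.\n'
    '3. The user wants to change a line to new content: set "intent" to '
    '"modify", "content" to the new content, and "line" to the line number.\n'
)

# Illustrative few-shot examples (assumptions, not the original ones).
EXAMPLES = [
    ("I'm done, leave draft mode.", {"intent": "exit"}),
    ("Add 'see you soon' to the end of line 2.",
     {"intent": "append", "content": "see you soon", "line": 2}),
    ("Change line 6 to 'What a lovely day today'.",
     {"intent": "modify", "content": "What a lovely day today", "line": 6}),
]

# Fields each intent must carry, following the three situations above.
REQUIRED_FIELDS = {
    "exit": {"intent"},
    "append": {"intent", "content", "line"},
    "modify": {"intent", "content", "line"},
}


def build_prompt(user_text: str) -> str:
    """Combine the task description, the examples, and the user's text."""
    shots = "\n".join(
        f"Text: {text}\nJSON: {json.dumps(answer)}" for text, answer in EXAMPLES
    )
    return f"{TASK}\n{shots}\nText: {user_text}\nJSON:"


def parse_reply(reply: str) -> dict:
    """Parse the model's reply and reject anything outside the three intents."""
    command = json.loads(reply)  # raises ValueError on invalid JSON
    if not isinstance(command, dict):
        raise ValueError("reply is not a JSON object")
    intent = command.get("intent")
    if not isinstance(intent, str) or intent not in REQUIRED_FIELDS:
        raise ValueError(f"unknown intent: {intent!r}")
    missing = REQUIRED_FIELDS[intent] - set(command)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return command
```

Validating the reply before acting on it is a design choice of this sketch: the model does the language understanding, while ordinary code decides whether the result is usable.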

But it doesn't end there. The thing we use to talk to GPT is called a prompt, and finding the right prompt is a skill in itself. In my case, I described it as a classification problem selecting one of the three situations and asked it to output in JSON format while providing some practical examples. These are some practical techniques to maximize the chances of getting the desired result. These techniques need to be learned gradually in daily work. Therefore, prompt engineering is still a skill that cannot be easily replaced.

An interesting perspective is the idea of a "cyber nesting doll": since GPT can help you write code and develop models, why not let GPT help with prompt engineering as well? Recently, I've been trying out GitHub's Copilot service, a GPT-based intelligent auto-completion service that guesses what you want to do based on your current code and automatically completes large chunks of code and comments. In my case, my prompt is essentially a string, so it doesn't have a code structure and is purely natural language. But when I started typing the second point, "Modify a previous line," Copilot completed the entire content for me, and I just pressed the tab key to accept its suggestion.

Then, when I typed "3." for the third point, it completed that as well. This is quite terrifying, as it means my hard-earned prompt engineering skills are now rendered obsolete. GPT replaces not only my need to develop models but also my need to do prompt engineering. So the real human contribution here is just spelling out the first sentence and then pressing the tab key. Even the subsequent examples can be fully completed by GPT. The entire model took me only two minutes from start to finish. Why two minutes and not one? Because I made a mistake in the examples by using single quotes instead of double quotes in the JSON, which made the output unparseable in Python and made me the weak link. If we only count pressing the tab key, it actually took just one minute from start to finish. Compared with the ten minutes of writing the prompt by hand, this is another tenfold gain in speed, and it lowers the barrier to entry even further.
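The quoting mistake is easy to reproduce with Python's standard `json` module. The sketch below uses made-up example strings and a hypothetical helper `try_parse`; it shows that single-quoted text is rejected, and that generating examples with `json.dumps` avoids the problem.

```python
import json

# Single quotes look like a Python dict literal, but they are not valid JSON.
single_quoted = "{'intent': 'append', 'content': 'hello', 'line': 3}"
double_quoted = '{"intent": "append", "content": "hello", "line": 3}'


def try_parse(text: str):
    """Return the parsed object, or None if the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


bad = try_parse(single_quoted)   # rejected: JSON strings need double quotes
good = try_parse(double_quoted)  # parsed into a dict

# Writing the few-shot examples with json.dumps sidesteps hand-typed quotes.
example = json.dumps({"intent": "append", "content": "hello", "line": 3})
```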

In summary, this is a truly astonishing development because it not only increases efficiency by hundreds or thousands of times but also lowers the barriers to entry by the same magnitude. From a positive perspective, human time and energy can be greatly liberated, allowing us to focus on leisure or genuinely creative endeavors. However, from another perspective, this also means that humans may become even more competitive, as it's unclear whether there will be enough positions in the future for so many people to do these tasks.

Of course, this example is biased since GPT is an NLP model, making it particularly well-suited for NLP tasks. If you asked it to do something else, like judging image content and outputting JSON, it might not be as easy. That said, the multimodal GPT-4 is already being tested, although not yet released, so perhaps image processing will be conquered in the near future. But the order-of-magnitude reduction in barriers still shocks me. While using Copilot, I'm regularly surprised because it seems to read my mind. Often, after typing only a few letters, it completes long functions for me. I used to think this was merely memorization, but as my experience grows, I'm increasingly convinced that it has some degree of generalization capability. I highly recommend everyone try Copilot; it was free for the first two months. I believe it has already increased my coding efficiency by about five times. Combined with GPT, this single model has saved me a week's worth of time. So today, I am truly amazed.

(This 1,200-word article was generated using the natural language dialogue editor mentioned above to create an outline, and then GPT organized the content after a 10-minute dictation.)

Update:

Today, I suddenly realized a problem: as an engineer, while designing this system, I might have had a mindset that the backend of this text editor must be a piece of code. So, we first need to understand the user's intention, translate it into a language the machine can understand, and then let the machine handle it entirely. However, this could be a misconception. If I didn't know programming at all, I might communicate directly with GPT, telling it that this is a piece of text and this is a command, asking it to apply the command to the text and return the result, instead of converting it into JSON first and then using a computer to process the text.

From this perspective, my previous expertise might have become a burden, turning into a "curse of knowledge" and reducing my coding efficiency. In other words, if I didn't know programming, I might be able to create a product like this with GPT within two minutes using this approach, but because I know programming, I would think of converting it into JSON first and then into specific text, which would take an hour instead. Moreover, this method has the advantage of not only lowering the entry barrier and increasing efficiency but also allowing for very flexible functionality without prior specification. For example, you can say, "Delete the first three lines entirely" or "Expand the second line into a 100-word short article." This brings greater flexibility but also the potential for abuse, so some protective measures need to be implemented in the engineering process.
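A sketch of what this direct approach might look like, together with one possible protective measure. Everything here is an assumption for illustration: the prompt wording, the names `build_direct_prompt` and `is_acceptable`, and the two size limits are hypothetical, and the model call itself is omitted.

```python
# Hypothetical limits; real values would depend on the product.
MAX_COMMAND_CHARS = 200   # refuse very long commands
MAX_GROWTH_CHARS = 2000   # refuse results that balloon the document


def build_direct_prompt(document: str, command: str) -> str:
    """Ask the model to apply the command to the text directly, with no JSON step."""
    return (
        "Below is a piece of text and a command. Apply the command to the "
        "text and return only the resulting text.\n\n"
        f"Text:\n{document}\n\nCommand: {command}\n\nResult:"
    )


def is_acceptable(document: str, command: str, result: str) -> bool:
    """A crude guard: reject oversized commands and runaway output."""
    if len(command) > MAX_COMMAND_CHARS:
        return False
    if len(result) - len(document) > MAX_GROWTH_CHARS:
        return False
    return True
```

A size check like this only limits how much a single command can do; it does not verify that the edit is the one the user asked for.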

However, is GPT truly intelligent enough to support such work entirely? To verify this, I ran some experiments, implementing this functionality in a very short time and testing it with various test cases. I found two significant issues. First, GPT-3.5 often isn't smart enough: it may drop words or copy keywords from the examples without modification, which makes it look clumsy, so its intelligence seems insufficient to support such an application. Second, GPT-4 performs much better and avoids these problems in many cases, but its latency is long and the resulting user experience is terrible. Of course, I believe this issue will be resolved over time.

In the end, I didn't adopt this approach but returned to the previous JSON-based solution. The main reason is that its stability still isn't good enough. For example, when I say, "Delete the first two lines," it might only delete the first line; or when I say, "Replace the third line with 12345," it replaces it with 1234. In other words, it is not as precise as a computer in terms of output control. To put it differently, from an engineering trade-off perspective, we want to strike a balance between flexibility, controllability, and engineering costs. Currently, GPT's fine control over output as a language model is still its weakness. From this viewpoint, using JSON as an intermediate layer to first understand the user's intention and then implementing it with a computer may be a sweet spot among the three factors. However, as GPT's capabilities develop in the future, we may increasingly lean towards intelligence, where someone who doesn't know programming might write faster than someone who does, with the same result. This might foreshadow our future tasks as coders, much like Zhang Wuji learning Tai Chi, mainly involving forgetting previous knowledge and continuously embracing new domains.
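The deterministic half of the JSON-based solution can be sketched as follows. The function name `apply_command` and the assumption that line numbers are 1-based are mine; the three intents follow the prompt described earlier. Because ordinary code performs the edit, "Replace the third line with 12345" always yields exactly 12345.

```python
def apply_command(lines: list[str], command: dict) -> list[str]:
    """Apply a parsed command to a list of lines and return a new list."""
    intent = command["intent"]
    if intent == "exit":
        return list(lines)  # nothing to edit

    index = command["line"] - 1  # assumption: users count lines from 1
    if not 0 <= index < len(lines):
        raise IndexError(f"line {command['line']} does not exist")

    result = list(lines)  # copy, so the caller's document is not mutated
    if intent == "append":
        result[index] = result[index] + command["content"]
    elif intent == "modify":
        result[index] = command["content"]
    else:
        raise ValueError(f"unknown intent: {intent!r}")
    return result
```

For example, `apply_command(["a", "b", "c"], {"intent": "modify", "content": "12345", "line": 3})` returns `["a", "b", "12345"]`.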
